My project was built in collaboration with Abby Lee and Tianxu Zhao. Together we built “Fireflies”, a concentration game that uses a Muse EEG, a p5 animation shown through a projector, and a Bluetooth-enabled jar that acts as a trigger. When our user opens the jar, our animation of fireflies appears. When our user is prompted to focus, the Muse reports that the user is in a state of concentration, and the flies in our animation gather together in response. We see this game as an interesting jumping-off point for many neuroscience-related conversations.

I have had laboratory experience working with an electroencephalogram (EEG), which records the brain's electrical activity through electrodes placed on the scalp. Different types of rhythmic activity, known as neural oscillations, occur simultaneously all over the brain, and their functions vary depending on the region in which they take place. We want to be clear with our audience: Muse is not a scientific apparatus. In fact, the company misrepresents many of the types of data its EEG reads, as well as how accurate that data is. That being said, Muse is capable of reading very localized, very simple changes in frequency and amplitude that are understood to be associated with the presence and absence of concentration. Concentration in and of itself is not an entirely well-understood phenomenon. However, at a reductive level that reflects the Muse’s limitations, our animation is contingent on a shift from lower-frequency oscillatory activity (usually referred to as alpha, roughly 8 to 12 Hz), which is commonly associated with a relaxed resting state, to higher-frequency oscillatory activity (usually referred to as beta, roughly 13 to 30 Hz), which is commonly associated with concentration.
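To make the alpha-to-beta idea concrete, here is a minimal Python sketch of one way a "focused or not" decision could be derived from two band-power readings. It is not the code we ran. The names `focus_score` and `FocusDetector`, the 0.6 threshold, and the five-sample smoothing window are all hypothetical, and the sketch assumes linear, non-negative band-power values.

```python
from collections import deque


def focus_score(alpha_power: float, beta_power: float) -> float:
    """Return beta's share of the combined alpha + beta power, in [0, 1].

    Assumes linear, non-negative band powers (an assumption of this sketch).
    """
    total = alpha_power + beta_power
    if total <= 0:
        return 0.5  # no usable signal: treat as neutral
    return beta_power / total


class FocusDetector:
    """Smooths recent scores so one noisy reading does not flip the state."""

    def __init__(self, threshold: float = 0.6, window: int = 5):
        self.threshold = threshold           # hypothetical cut-off
        self.samples = deque(maxlen=window)  # keeps only the latest scores

    def update(self, alpha_power: float, beta_power: float) -> bool:
        """Record one reading and report whether the user counts as focused."""
        self.samples.append(focus_score(alpha_power, beta_power))
        mean = sum(self.samples) / len(self.samples)
        return mean >= self.threshold
```

Averaging over a short window trades a little responsiveness for stability, which seems like a reasonable choice for a consumer headset whose readings jump around.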

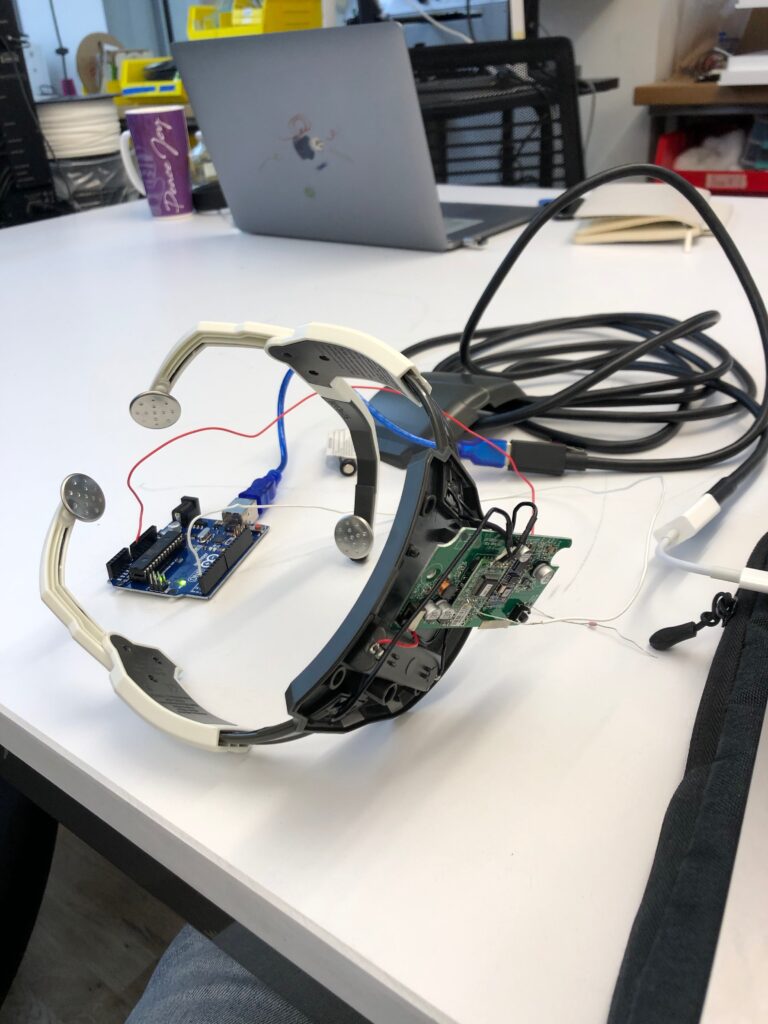

We integrated our Muse with our p5 animation through a series of steps. First, we read the Muse EEG data with a third-party mobile application titled “Muse Monitor” on one of our phones. Our phone then transmits the live EEG data to a Processing sketch using the Open Sound Control (OSC) protocol. We can then pass the OSC data from our Processing sketch to our p5 animation, which runs locally.
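For readers curious about what actually travels over the network in that chain, the Python sketch below decodes a simple OSC message by hand using only the standard library. It illustrates the OSC 1.0 wire format and is not the Processing code we used. The address `/muse/elements/alpha_absolute`, the four-float payload, and the helper names are assumptions made for the example.

```python
import struct


def _read_padded_string(data: bytes, offset: int):
    """Read a null-terminated OSC string, which is padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip the terminator and padding
    return text, next_offset


def parse_osc_message(data: bytes):
    """Return (address, args) for a message holding only float32/int32 arguments."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "f":
            (value,) = struct.unpack_from(">f", data, offset)  # big-endian float32
        elif tag == "i":
            (value,) = struct.unpack_from(">i", data, offset)  # big-endian int32
        else:
            raise ValueError(f"unsupported type tag: {tag}")
        args.append(value)
        offset += 4
    return address, args


def build_osc_message(address: str, floats) -> bytes:
    """Build a float-only OSC message; used here only to create test input."""
    def pad(raw: bytes) -> bytes:
        return raw + b"\x00" * (4 - len(raw) % 4)

    tags = "," + "f" * len(floats)
    body = b"".join(struct.pack(">f", value) for value in floats)
    return pad(address.encode("ascii")) + pad(tags.encode("ascii")) + body
```

A round trip such as `parse_osc_message(build_osc_message("/muse/elements/alpha_absolute", [0.5, 0.25, 0.75, 1.0]))` gives back the address and the four values. In a live setup the bytes would instead arrive as UDP datagrams from the phone.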

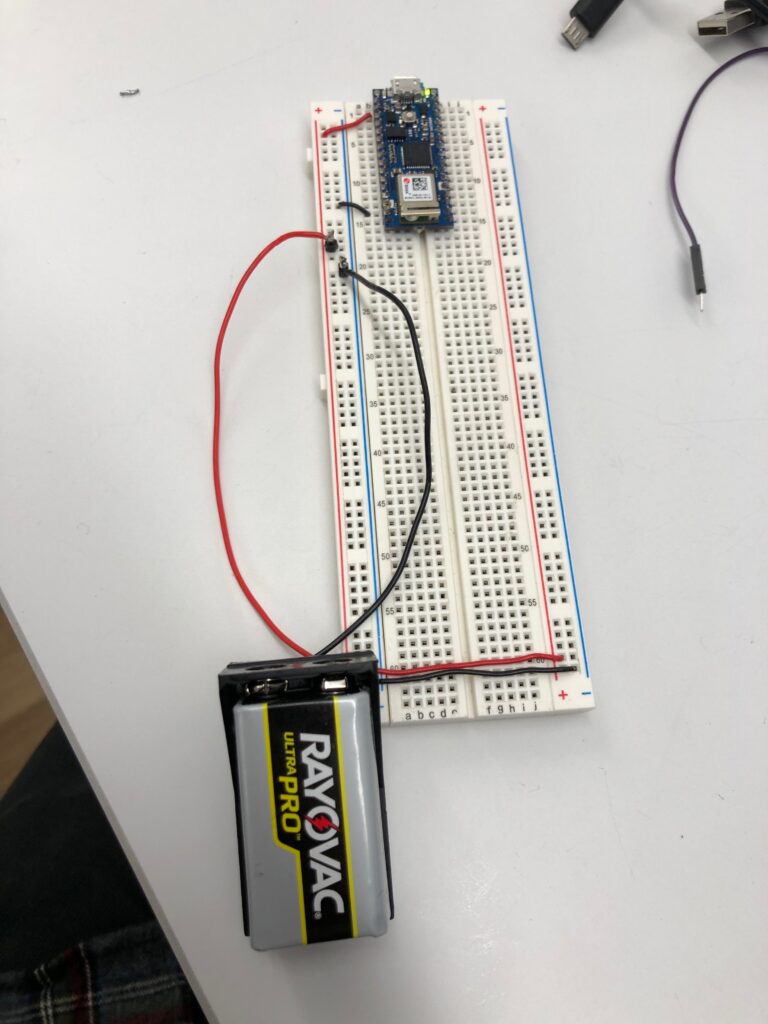

We connect our jar to our animation using an Arduino Nano, which has a built-in Bluetooth transmitter. We have programmed this Nano with a proprietary Bluetooth transmission library, capable of easily interfacing with our local p5 animation. Our Nano is attached to a button, which is triggered by the removal of the jar’s lid.

The game plan is that when the lid of our jar is removed, our p5 animation plays and the fireflies respond to the Muse EEG data. Once the lid of the jar is replaced, the animation stops. To be clear: the Muse and the jar operate independently of one another.
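The relationship between the two inputs can be sketched as a tiny state model. This is a simplified Python illustration rather than our p5 code, and the class and method names are hypothetical. The lid alone decides whether the animation plays, and the focus signal only matters while it is playing.

```python
from dataclasses import dataclass


@dataclass
class FirefliesState:
    """Tracks the two independent inputs: the jar lid and the Muse focus signal."""

    lid_open: bool = False
    focused: bool = False

    def on_lid(self, is_open: bool) -> None:
        """Called when the jar's button reports the lid removed or replaced."""
        self.lid_open = is_open

    def on_focus(self, is_focused: bool) -> None:
        """Called whenever a new focus decision arrives from the EEG side."""
        self.focused = is_focused

    @property
    def animation_playing(self) -> bool:
        # The lid is the only thing that starts or stops the animation.
        return self.lid_open

    @property
    def flies_converging(self) -> bool:
        # Focus only has a visible effect while the animation is playing.
        return self.lid_open and self.focused
```

Keeping the two inputs as separate fields mirrors the point above: neither device needs to know about the other, and the animation simply combines their latest values on each frame.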

Ultimately, my group learned how to take Muse data and use it in a p5 sketch. We learned how to connect an Arduino to a computer via Bluetooth. We discovered that the delays created by NeoPixel code can interfere with Bluetooth signal transmission. We also broke a few Arduino Nanos trying to figure out an optimal long-lasting power source for our jar.

Special thanks to:

Jeff Feddersen, our Physical Computing professor, without whom we could not have realized this project, let alone used a multimeter.

Louise Lessel for pointing us to Gene Kogan’s OSC Processing library on GitHub, and for being an approachable and accessible wealth of Muse knowledge.

Tom Igoe for teaching us how to connect devices with Bluetooth.

Residents Jesse Simpson, Aiden Nelson, Ellen Nickles, and Anthony Bui for their help in troubleshooting.