For my final project in Hello Computer, I redeveloped my Personal Check-In Bot concept into a more casual and more ethical Check in Buddy. Check in Buddy was inspired by my observation that, since the pandemic began, most of my conversations start with people asking me how I am, regardless of how well we know each other. In writing Check in Buddy’s dialog, I tried to insert my own personality, using personal colloquialisms and humor in place of the dry, formal tone I started with. Below, I demonstrate three potential Check in Buddy dialog pathways, each triggered by sentiment analysis that assesses the user’s input as a “good,” “ok,” or “bad” mood.
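To make the three-pathway idea concrete, here is a minimal sketch of how a sentiment score could be mapped onto the “good,” “ok,” and “bad” branches. It assumes the score runs from -1.0 (negative) to 1.0 (positive); the thresholds, function names, and reply lines are all hypothetical placeholders, not Check in Buddy’s actual values or dialog.

```python
# Hypothetical cutoffs: the real project's thresholds are not stated in this post.
GOOD_THRESHOLD = 0.25
BAD_THRESHOLD = -0.25

# Placeholder replies, not Check in Buddy's actual dialog.
REPLIES = {
    "good": "Love that for you. What went right today?",
    "ok": "Fair enough. Want to tell me more?",
    "bad": "That sounds rough. I'm listening if you want to talk.",
}


def mood_from_score(score: float) -> str:
    """Bucket a sentiment score (assumed range -1.0 to 1.0) into a mood label."""
    if score >= GOOD_THRESHOLD:
        return "good"
    if score <= BAD_THRESHOLD:
        return "bad"
    return "ok"


def reply_for(score: float) -> str:
    """Pick the dialog pathway that matches the user's mood."""
    return REPLIES[mood_from_score(score)]
```

Keeping the bucketing in one small function means the cutoffs can be tuned after play testing without touching the dialog itself.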

Technical Issues Faced

1. YouTube API

As you can see at the end of my “OK” input video, I intended to add a feature that would auto-play audio from a list of trending comedy videos on YouTube. I followed this documentation; however, YouTube has since updated its API, and I could not figure out how to adapt the sample code to the API’s new syntax.

If you are a more experienced or more persevering programmer than I am, go for it!
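For anyone who does take this on, one possible starting point is the YouTube Data API v3 `videos.list` endpoint with `chart=mostPopular`. The sketch below only builds the request URL and pulls watch links out of a response; it is not the sample code I originally followed. It assumes you have your own API key, that category ID 23 corresponds to Comedy in your region (worth confirming against the API’s category list), and the helper names are made up for illustration. Getting the audio to actually play inside the bot is a separate problem that this does not solve.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://www.googleapis.com/youtube/v3/videos"
COMEDY_CATEGORY_ID = "23"  # Assumption: Comedy category ID; verify for your region.


def build_trending_comedy_url(api_key: str, region_code: str = "US",
                              max_results: int = 5) -> str:
    """Build a videos.list request URL for the most popular comedy videos."""
    params = {
        "part": "snippet",
        "chart": "mostPopular",
        "videoCategoryId": COMEDY_CATEGORY_ID,
        "regionCode": region_code,
        "maxResults": max_results,
        "key": api_key,
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def watch_urls(response: dict) -> list[str]:
    """Turn a parsed videos.list JSON response into a list of watch-page URLs."""
    return [
        f"https://www.youtube.com/watch?v={item['id']}"
        for item in response.get("items", [])
    ]
```

The URL can be fetched with any HTTP client, and the parsed JSON passed to `watch_urls`.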

2. Google’s Dialogflow Phone Gateway

After giving up on the YouTube API, I decided to try my hand at integrating Check in Buddy with Google’s Phone Gateway feature. This meant that in order to contact Buddy, users would have to call a phone number. Google’s Phone Gateway made it easy to integrate some fun features, including one that served the same function as the aforementioned YouTube API, and some unethical features, which I will discuss later. I ended up taking Check in Buddy out of Phone Gateway because, in the process of delivering sentiment analysis results from Firebase to Dialogflow and then to the Phone Gateway, the results become illegible to the Phone Gateway, which breaks the bot. Despite some creative programmatic assistance from Nicole He (my professor), I nixed the Phone Gateway feature, opting instead to focus on Check in Buddy’s character concept.

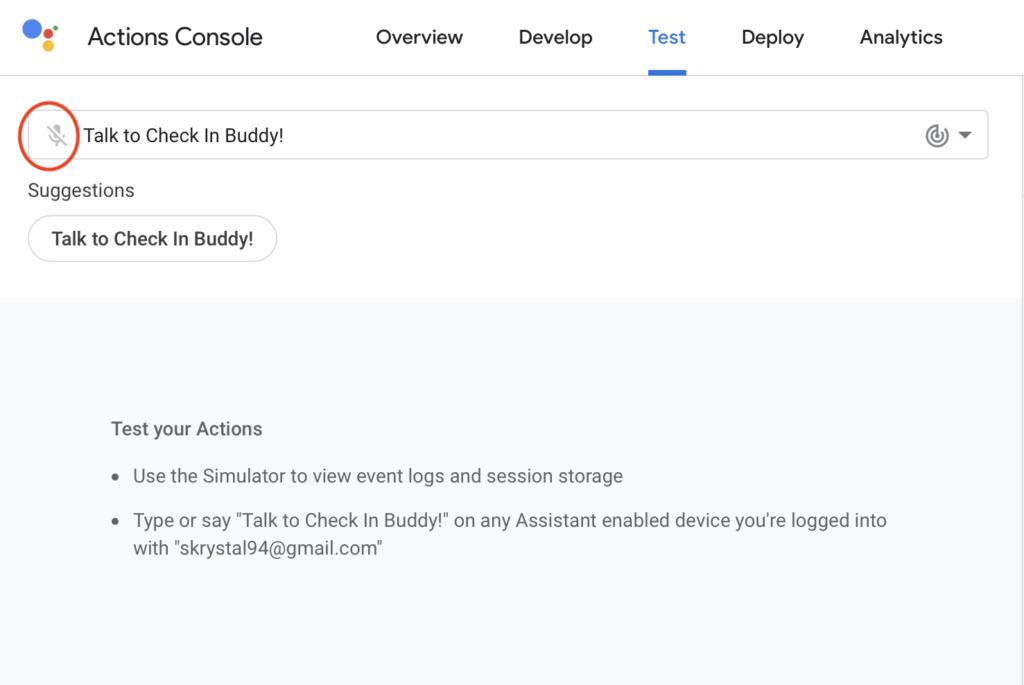

3. Google’s Actions Console Hates My Microphone

Google’s Actions Console won’t recognize my microphone anymore. Nobody knows why. For better or worse, the only way I can talk to Check in Buddy is through the Google Assistant app on iOS.

I’ll keep searching for an explanation.

Ethical Issue Faced

What kinds of advice and resources is AI equipped to give responsibly?

AI is decidedly not equipped to dispense medical advice with the same level of nuance as a trained human professional. To that end, I stayed away from implementing an automated “diagnostic” feature in response to a “bad” sentiment analysis input.

However, in integrating Google’s Dialogflow Phone Gateway, I lost sight of why I was building Check in Buddy. I focused more on integrating features that could direct users to a suicide or substance abuse helpline than on asking myself why I should include these features to begin with (I have since taken them out). Redirecting users to a suicide or substance abuse helpline is ethically dubious for (at least) two reasons. First, Check in Buddy was built on a Google platform, so Google could gain access to personal and sensitive data about a user’s propensity for suicide and/or substance abuse from a seemingly innocuous conversation with my bot. Users may not want that, and I had not given them an opportunity to consent to this disclosure. Second, Check in Buddy was engaging in behavior that I would not engage in myself. Were I concerned about a friend’s mental well-being, I would have a direct conversation with them, where I could offer substantive support, not a flippant check-in. Check in Buddy is not a user’s friend, nor can it offer proper support. I adapted Check in Buddy’s behavior to be less about dispensing information and more about receiving it; I believe this to be a more ethical “support” power dynamic between user and bot.

I credit Nicole He for suggesting I think critically about these features, and I regret my technochauvinistic process. Had I given myself more time to playtest Check in Buddy with my peers, I hope I would have corrected this ethical misstep sooner.

Next Steps

I received two pieces of feedback that will direct how I move forward with Check in Buddy:

Irene Alvarado noted that because I had inserted my personality into Check in Buddy’s dialogue, my interactions with Check in Buddy could serve a purpose similar to checking in on myself. This insight resonated with me. Were I to develop Check in Buddy into a more personal, frequently used bot, I would first want to strip Google, or any other data-collecting entity, out of my buddy so that I could engage with it more personally.

Everest Pipkin suggested I play the game Eliza, which addresses the ethical question I wrestled with: what is reasonable for artificial intelligence to suggest in a support setting? Before I develop Check in Buddy’s personality further, I’ll play this game.